Google AI Will Take Any Meaningless Phrase You Give It and Give It a Meaning

Google will define nonsense for you; you just have to ask.

Published April 25, 2025, 12:54 p.m. ET

Although AI has been integrated into more and more of our lives over the past few years, those integrations have not always been smooth. AI is prone to something called hallucination, in which it presents false information as if it were true or simply makes something up.

Those hallucinations can be either serious or silly, and when they're silly, things can quickly get out of hand. Case in point: one X (formerly Twitter) user noticed that you could string any group of words together, and Google's Gemini AI would treat it as an idiom or common expression and give it a meaning. "Two dry frogs in a situation" is just one example. Here's what we know.

Google's Gemini AI thinks "two dry frogs in a situation" is a common expression.

In a post, Matt Rose (@rose_matt) explained that he had asked Gemini for the meaning of a string of words that doesn't mean anything and got an explanation anyway.

"Learnt today that you can type any nonsense into Google followed by 'meaning,' and AI will assume you're searching a well-known human phrase and frantically come up with what it thinks it could mean," he wrote.

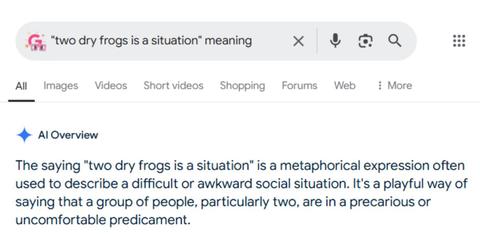

The example that Matt used was "two dry frogs in a situation," which Gemini explained was a "metaphorical expression often used to describe a difficult or awkward social situation. It's a playful way of saying that a group of people, particularly two, are in a precarious or uncomfortable predicament."

If you search the term now, the results no longer include the AI answer, probably because this was not the best look for Gemini.

As this incident makes clear, Gemini will sometimes just make something up if it doesn't have a good answer, assuming that the person searching for the term has something in mind. Although this is a fairly low-stakes example, it speaks to the way these results are unreliable, especially when you're searching for something that might be relatively obscure.

Other people tried the same thing.

After Matt posted his example on X, other users tried their hand at inventing meaningless idioms and seeing what kind of definition Google gave them.

One person tried this with the phrase "feelings can be hurt, but fish remember," and got the explanation you might expect someone who didn't study for the test to give if they had to answer the question anyway.

Another person tried "big winky on the skillet bowl," which isn't an idiom now but probably should be in the near future.

While it didn't work for every user, many found that they could get made-up definitions for their made-up phrases, signaling that AI is, above all else, designed to give users an answer, even if that answer is misleading or downright incorrect.

We haven't gotten to the stage yet where AI is comfortable admitting what it doesn't know. As anyone who has ever been to therapy knows, though, admitting what you don't know is at least half the battle.