Apparently, AI is Stealing Everything in Microsoft Word — Viral Video Sparks Backlash Against Tech Giant

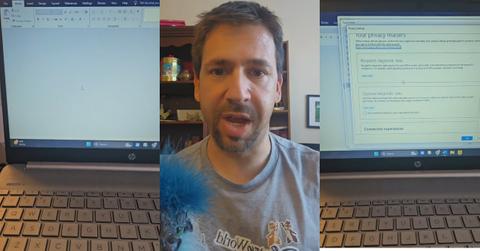

He shows how to disable this function.

Published Nov. 16, 2024, 5:00 a.m. ET

Author Christopher Mannino's (@parentingandbooks) viral TikTok video has shocked the internet, racking up 1.2 million views in the process. But are we really shocked anymore? In the clip, Mannino films his computer screen while speaking from behind his phone, exposing what he calls an alarming change to Microsoft's AI policy for Microsoft Word users.

He guides viewers through a series of settings that, he says, allow Microsoft to use all of a user's documents for AI training unless they are changed manually.

"Anybody else who's writing on Word, this is a must-hear," Mannino begins, as he walks viewers through File, Options, Trust Center, and Privacy Settings. It's here, he says, that a hidden checkbox labeled "Connected experiences" needs to be unchecked to stop Microsoft from scraping every document in Word.

"You are giving Microsoft permission to scrape all of your data," Mannino warns. The outrage was palpable in the comment section, with viewers slamming the tech giant for hiding this setting so deeply.

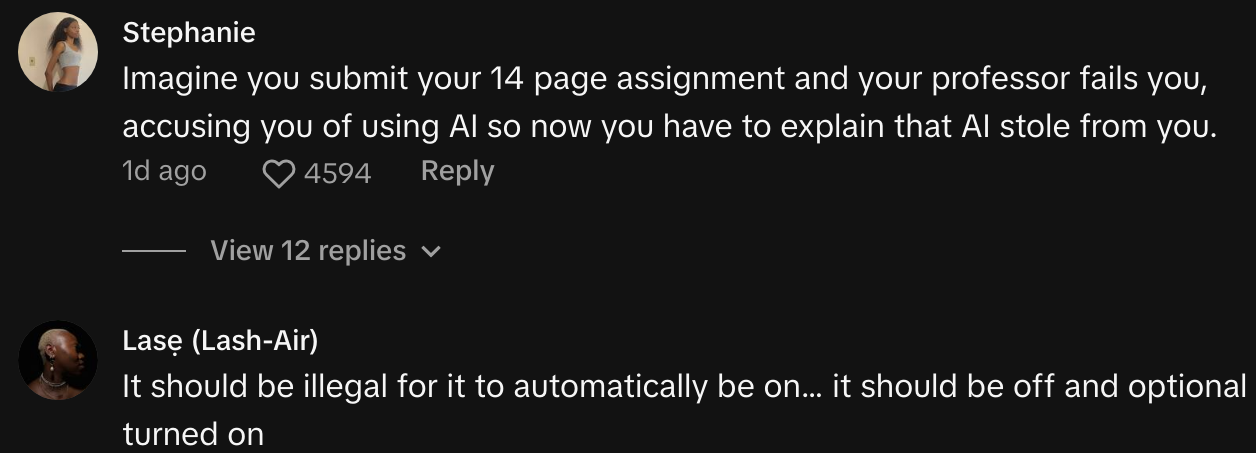

One user wrote, "The fact that the setting is so hidden too just tells us that they KNOW it's wrong." Another vented, "AI everything. Subscription everything. They should pay us a subscription fee to use our work."

Others pointed to a bigger issue at play: "THIS IS WHY WE NEED AI REGULATIONS YESTERDAY."

Mannino's video has continued the conversation about the role of AI in our daily lives — particularly as it relates to personal privacy and intellectual property. As tech companies roll out AI-assisted features more aggressively, concerns over user consent, data ownership, and regulatory oversight continue to grow.

The incident serves as yet another reminder of the deepening entanglement of AI with our everyday tools and the ethical and legal minefields that come with it.

The conversation around AI regulation in America is heating up, but the pace of change remains sluggish compared to the rapid advancements in AI technology.

While the European Union has proposed and enacted comprehensive regulations under the EU AI Act, the United States has been slower to act. The U.S. government has begun to pay attention to the need for AI regulation, but it's largely been a patchwork effort, consisting of state-level initiatives and federal agencies issuing guidelines without much enforcement power.

President Biden's recent executive order aims to push for greater safety and oversight of AI technologies, but some say it lacks the full weight of legislation that could meaningfully regulate data use and consumer privacy.

The call for AI regulation isn't just about individual privacy, either; it's about ensuring that the tools we use empower rather than exploit us. Commenters like those on Mannino's video are voicing frustrations that resonate widely. Many feel that companies are leveraging people's creative work, school assignments, and personal data to enhance AI systems while offering little in return to the individuals whose data they benefit from.

As one commenter succinctly put it: "Imagine you submit your 14-page assignment and your professor fails you, accusing you of using AI so now you have to explain that AI stole from you." It's a vicious cycle, exacerbated by a lack of regulatory guardrails.

Another growing area of concern is the impact of AI on education. As students increasingly use tools like ChatGPT to help write papers, the ethics of AI-assisted academic work have slipped into gray areas. Some see AI as a powerful tool that can help students brainstorm ideas or outline their work with more creativity. Others view it as a threat to academic integrity, as it opens the door to outright cheating.

The prevalence of AI-generated essays has pushed many educational institutions to take a hard stance against the use of AI, while others have opted to adapt, focusing on teaching students how to use these tools responsibly rather than banning them outright.

AI is blurring the line between assistance and deception. The moral quandary of relying on AI in education boils down to the intent and the transparency of its use. Is a student using AI to enhance their understanding of a subject or to circumvent learning altogether?

These are the kinds of questions that educators are grappling with, as more students lean on AI to complete assignments. Some have suggested that a new code of academic conduct — one that integrates the responsible use of AI — is needed to address this evolving landscape.

The video by Mannino, and the subsequent discussion, is yet another reminder of how deeply AI is becoming embedded in our day-to-day lives, and how crucial it is that we address the ethical and regulatory gaps that have emerged as a result.

Whether it's tech companies scraping data without explicit consent or students using AI to write papers, it's clear that a more structured approach to AI regulation is overdue. Until then, users need to stay informed, cautious, and proactive in protecting their work.