Google Searches That Show Racism Embedded in the Algorithm

Updated Oct. 23, 2018, 4:10 a.m. ET

Racism existed before search engines, but Google has consistently failed to work against it.

Let’s look at a few times Google’s algorithms could have done better.

1. The now-classic example: the difference between searching for “three black teenagers” and “three white teenagers.”

2. That time Google insisted that white people do not steal cars.

3. This Instagram user’s child began typing “Why are asteroids…” into Google when autocomplete took over and suggested “Why are asians bad drivers?”

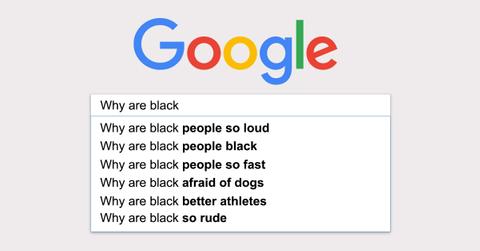

4. And it's not the first time Google has reinforced racial stereotypes through its suggested text.

5. Here are some claims Google makes about Jewish people.

6. And here's a stereotype the search engine feels is worth perpetuating.

Now, let's dig a little deeper into the history of racism in Google search and the company's responses to complaints. Remember the example we used in #1?

In 2016, 18-year-old Kabir Alli Googled a simple phrase: “three black teenagers.” The results, which went viral, were mugshots of black teenagers. When he Googled “three white teenagers,” the results were completely different: happy groups of white teenagers laughing and hanging out.

The outpouring of anger led to an apology from Google, but also to a statement that there was little the company could do about the algorithm. Since then, people have tried to get Google to take responsibility for its algorithm and to recognize that the search engine is not neutral.

In her book, Algorithms of Oppression: How Search Engines Reinforce Racism, published this year by NYU Press, Safiya Umoja Noble explores how Google not only does too little to combat racism in its search engine but actually reinforces racial stereotypes. Noble, an Assistant Professor at the University of Southern California, teaches classes on the intersection of race, class, and the internet.

At Google in 2017, 91% of employees were white or Asian, and only 31% of its workforce identified as women. In the company's technical roles the numbers were even more skewed: only 20% of employees were women and 80% were men.

Noble argues that algorithms are not neutral: they are created by people and carry those people's biases. Based on her extensive research, Noble believes that Google must take responsibility for the racism in its search engine. Algorithms are written in computer code, and like any language, code reflects the culture in which it is created.

Noble found that Googling “black girls” quickly leads to porn. Googling “asian girls” likewise soon turns up sexualized images of scantily clad women. A search for “successful woman,” meanwhile, most often returned images of white women.

When these instances emerge, Google blames them on its “neutral” algorithm and makes small fixes to those specific searches. Noble argues that Google needs to take responsibility and undergo a larger reckoning, completely reworking its algorithms.

Instead of opening up portals of information and increasing understanding across difference, Google is reinforcing old stereotypes and giving them new life. To describe this, Noble coined the term “technological redlining,” echoing the racist housing practices of the 20th century. Today, tech companies keep the way their programs and algorithms make decisions invisible, effectively hiding their biases.

As Safiya Umoja Noble recognizes, there are many ways Google and other search engines could do better.

First, tech companies need to recognize that algorithms are not inherently neutral. There is no easy fix for widespread bias, but a more diverse workforce could begin to address it.

Google is just the beginning; Noble hopes other tech companies, like Yelp, will learn similar lessons.